This project consisted of several VR prototypes I built with Unity and the VRTK plugin for animations-and-more. Here are the three prototypes:

1. Look-To-Click

The goal of this prototype was to find a way of navigating through the scene without using a mouse or controller. Because the approach is hands-free, it is especially useful for budget VR headsets such as Google Cardboard, and also for AR apps.

We quickly realized that others had already solved this problem, so we implemented our own version: it tracks how long the player looks at an object and triggers an action once the gaze has lasted long enough. Here is an example that shows this simple concept:
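The dwell-time idea fits in a few lines. This is a minimal illustration in Python, not the project's Unity code; it assumes the engine casts a ray from the center of the view every frame and reports which object was hit (or `None`), together with the frame time `dt`. The class and parameter names (`GazeDwellSelector`, `dwell_time`, `on_select`) are hypothetical.

```python
from typing import Callable, Optional


class GazeDwellSelector:
    """Fires on_select once the same object has been looked at for dwell_time seconds."""

    def __init__(self, dwell_time: float, on_select: Callable[[str], None]):
        self.dwell_time = dwell_time      # assumed threshold in seconds
        self.on_select = on_select        # hypothetical callback, e.g. "teleport here"
        self.current: Optional[str] = None
        self.elapsed = 0.0
        self.fired = False

    def update(self, gazed_target: Optional[str], dt: float) -> float:
        """Call once per frame with the object hit by the gaze ray; returns progress 0..1."""
        if gazed_target != self.current:
            # The gaze moved to another object (or to nothing): restart the timer.
            self.current = gazed_target
            self.elapsed = 0.0
            self.fired = False
        if self.current is None:
            return 0.0
        self.elapsed += dt
        if not self.fired and self.elapsed >= self.dwell_time:
            self.fired = True             # trigger only once per continuous look
            self.on_select(self.current)
        return min(self.elapsed / self.dwell_time, 1.0)
```

The returned progress value could drive a radial fill indicator so the player sees the selection coming. Resetting the timer whenever the gaze leaves the object is a deliberate choice: it avoids accidental selections when the player merely glances around.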

The next step was to build 360° video spheres that the player could use as teleport locations, but another project came up during development, and work on prototype #2 began.

2. Model Presenter

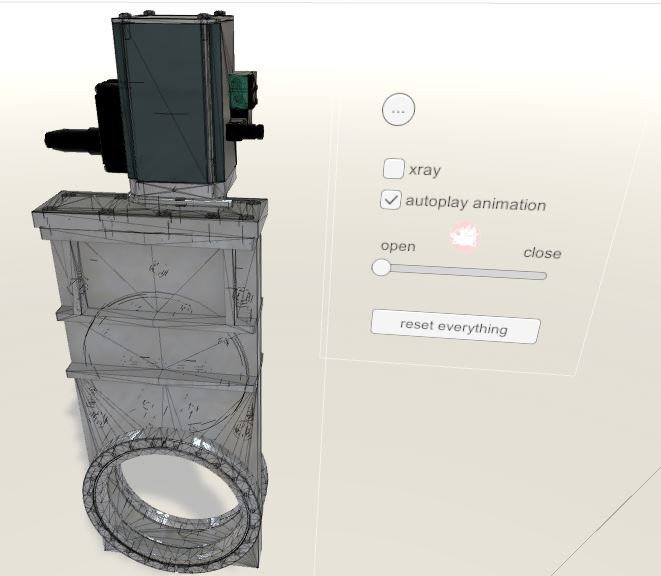

Many of animations & more's customers have products they want to showcase at events, and VR is a reliable way to draw visitors to a booth. For this prototype it was important that users could manipulate a 3D model intuitively. We achieved this with simple gestures that you would also use in the real world to move, rotate, or scale an object.
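One common way to map such two-handed gestures to a model is sketched below under explicit assumptions: both hands grab the object, it follows the midpoint between the hands, it scales with the ratio of the current to the initial hand distance, and it rotates only around the vertical (y) axis by the change in the horizontal angle between the hands. This is Python for illustration, not the prototype's code or VRTK's API; `two_hand_update` and its helpers are hypothetical names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    return tuple((p + q) / 2.0 for p, q in zip(a, b))


def horizontal_angle(a: Vec3, b: Vec3) -> float:
    """Angle in degrees of the vector a -> b projected onto the x-z plane (y is up)."""
    return math.degrees(math.atan2(b[2] - a[2], b[0] - a[0]))


def two_hand_update(start_left: Vec3, start_right: Vec3,
                    left: Vec3, right: Vec3,
                    start_pos: Vec3, start_scale: float, start_yaw: float):
    """Return (position, scale, yaw) from the hand positions at grab time and now."""
    # Move: the model follows the midpoint between both hands.
    m0, m1 = midpoint(start_left, start_right), midpoint(left, right)
    position = tuple(p + (new - old) for p, old, new in zip(start_pos, m0, m1))

    # Scale: pulling the hands apart enlarges the model, pushing them together shrinks it.
    start_dist = max(math.dist(start_left, start_right), 1e-6)  # avoid division by zero
    scale = start_scale * math.dist(left, right) / start_dist

    # Rotate: change of the hand-to-hand direction around the vertical axis, wrapped
    # to [-180, 180) so crossing the +/-180 degree boundary does not cause a jump.
    # The sign convention depends on the engine's handedness; flip delta if needed.
    delta = horizontal_angle(left, right) - horizontal_angle(start_left, start_right)
    delta = (delta + 180.0) % 360.0 - 180.0
    return position, scale, start_yaw + delta
```

Measuring everything relative to the pose at grab time, rather than accumulating small per-frame changes, keeps rounding errors from drifting the model over a long interaction.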

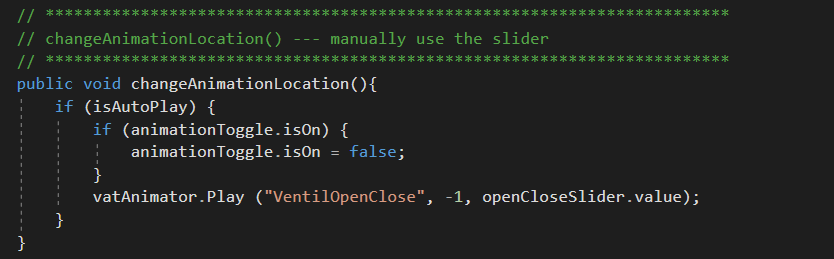

This was later extended with an interface for playing animations, switching view types, and changing other options.

Coding the interface was simple; getting the "feel" of rotating and scaling the object right was much trickier. How much should the object scale or rotate for a given hand movement? Answering this took several rounds of iteration and testing with different people on our team.
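The "feel" ultimately comes down to a handful of tuning parameters. The sketch below shows the kind of knobs such testing typically adjusts: an exponent that damps or amplifies scaling, scale limits, a rotation gain with a small dead zone against hand jitter, and frame-rate-independent smoothing. All function names and default values are assumptions for illustration, not the values we settled on.

```python
import math


def tuned_scale(raw_ratio: float, sensitivity: float = 0.8,
                min_scale: float = 0.1, max_scale: float = 10.0) -> float:
    """Map the raw hand-distance ratio to a clamped scale factor.

    An exponent below 1 damps scaling, above 1 amplifies it. Because it acts on
    the ratio, growing and shrinking stay symmetric (2x and 0.5x are damped equally).
    """
    return min(max_scale, max(min_scale, raw_ratio ** sensitivity))


def tuned_rotation(delta_deg: float, gain: float = 1.0, dead_zone_deg: float = 2.0) -> float:
    """Ignore tiny hand jitter, then scale the remaining rotation by a gain."""
    if abs(delta_deg) <= dead_zone_deg:
        return 0.0
    # Subtract the dead zone so the output starts at 0 instead of jumping.
    return math.copysign(abs(delta_deg) - dead_zone_deg, delta_deg) * gain


def smooth(current: float, target: float, rate: float, dt: float) -> float:
    """Frame-rate-independent exponential smoothing toward the target value."""
    t = 1.0 - math.exp(-rate * dt)
    return current + (target - current) * t
```

Keeping these as a few named parameters makes it cheap to try different settings with each tester instead of rewriting the gesture logic.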

One of the cool things you can do with this system is create scenarios that would normally be impossible, such as shrinking the character small enough to literally walk through the object itself:

3. Assembly Line

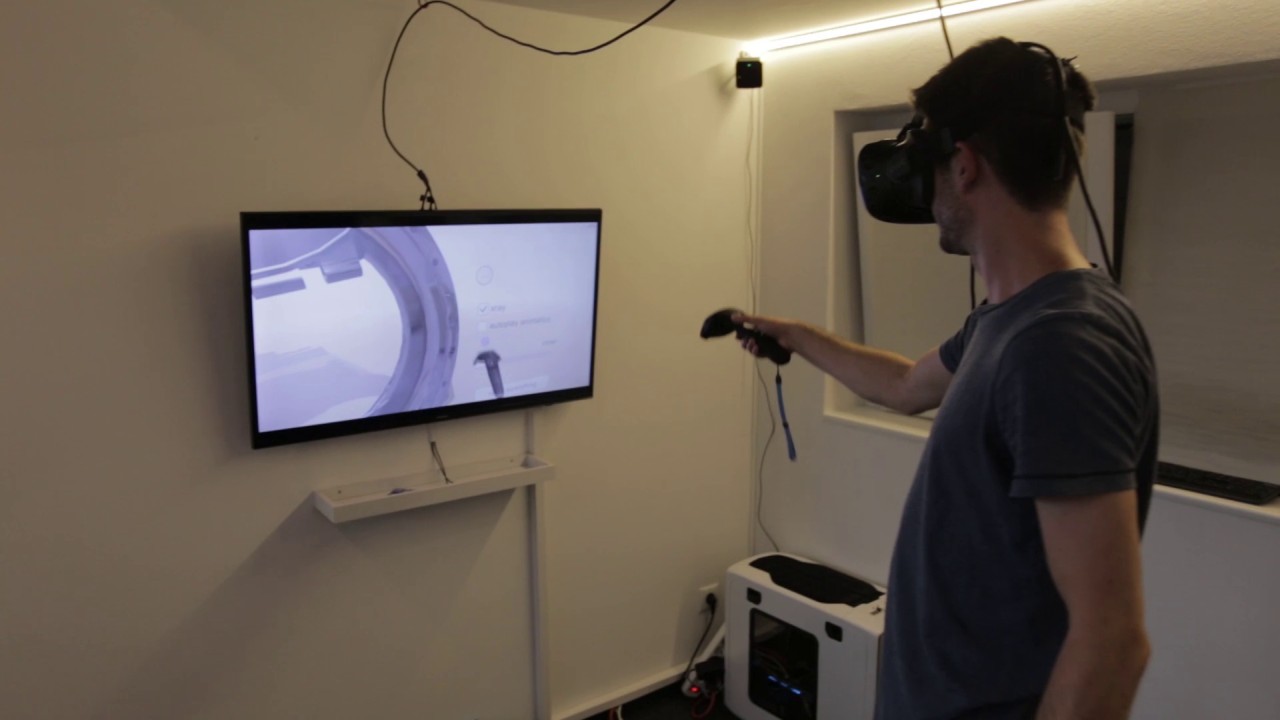

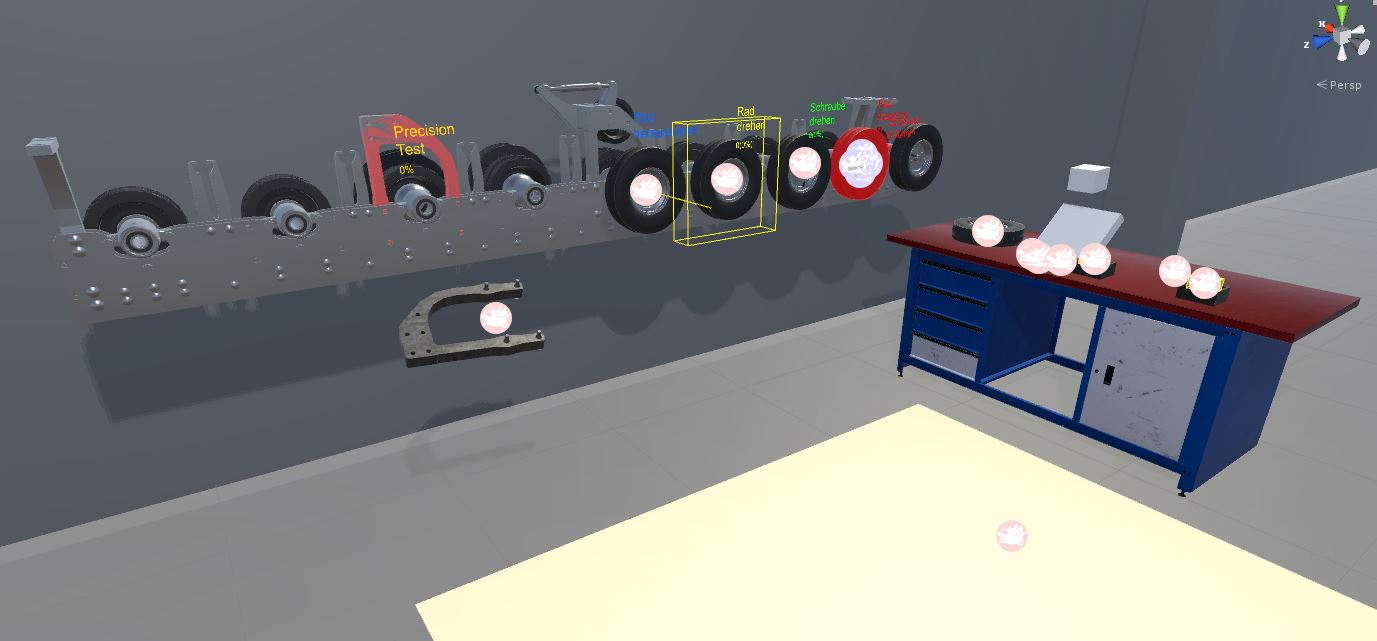

The aim of the third prototype was to build a virtual assembly line and test whether the interactions are accurate enough for real-world use. Such a safe environment could then be used to train workers all around the world.

We later showed the prototype to a large company that was very interested in this kind of application, but unfortunately I am not allowed to share more details.

Here is an overview of all the interactions I created for this prototype:

- pressing buttons spawns bolts that you can pick up and put into predefined openings in the wheels

- once a bolt is in the right place, you have to screw it in with the same twisting gesture you would use in the real world

- one of the wheels has to be rotated exactly to a predefined percentage

- another one has an exploded view that is activated when you pull it out of the wall

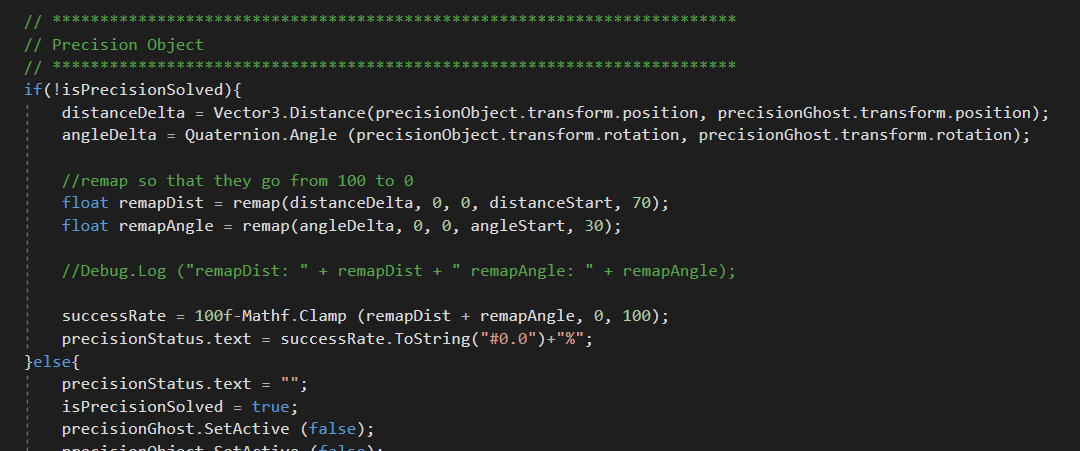

- the last station is a precision test in which you have to position and fix a metal part accurately

The code for this was again quite simple: I checked the distance to a reference model that was already perfectly placed and compared the rotation angles of the two.
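The precision test, and likewise the wheel that has to reach a predefined percentage, both come down to comparing a measured value with a target under a tolerance. Below is a minimal Python sketch of both, not the prototype's Unity code. Rotations are assumed to be unit quaternions in (w, x, y, z) order, the wheel is assumed to receive its per-frame twist in degrees, and all names and tolerances (1 cm, 5°, ±1 %) are hypothetical. In Unity itself, `Vector3.Distance` and `Quaternion.Angle` provide the distance and angle measurements.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed normalized


def rotation_angle_deg(q1: Quat, q2: Quat) -> float:
    """Smallest angle in degrees that rotates q1 onto q2."""
    # abs(): q and -q describe the same rotation.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))  # clamp rounding above 1


def is_correctly_placed(pos: Vec3, rot: Quat, ref_pos: Vec3, ref_rot: Quat,
                        max_distance: float = 0.01, max_angle_deg: float = 5.0) -> bool:
    """True if the part is close enough to the perfectly placed reference model.

    max_distance is in scene units (1 cm if one unit is one meter).
    """
    return (math.dist(pos, ref_pos) <= max_distance
            and rotation_angle_deg(rot, ref_rot) <= max_angle_deg)


class RotaryWheel:
    """A wheel that must be turned to a target percentage of its travel range."""

    def __init__(self, full_range_deg: float = 360.0,
                 target_percent: float = 75.0, tolerance_percent: float = 1.0):
        self.full_range_deg = full_range_deg
        self.target_percent = target_percent
        self.tolerance_percent = tolerance_percent
        self.angle_deg = 0.0

    def rotate(self, delta_deg: float) -> None:
        """Apply this frame's twist, clamped to the wheel's mechanical range."""
        self.angle_deg = min(self.full_range_deg, max(0.0, self.angle_deg + delta_deg))

    @property
    def percent(self) -> float:
        return 100.0 * self.angle_deg / self.full_range_deg

    def is_on_target(self) -> bool:
        return abs(self.percent - self.target_percent) <= self.tolerance_percent
```

Comparing against a reference model keeps the check independent of how the part is shaped: the target pose lives in the scene, and only the two tolerances need tuning.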